The only logs I see are from my Python Django app: requests to the payment gateway service get rejected with a timeout because the app cannot connect to the proxy:

```python
except requests.exceptions.ConnectTimeout as e:
    raise HTTPException(status_code=502, detail=f"Proxy connection timeout: {str(e)}")
```
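If the proxy blip is short, a couple of retries before returning the 502 might hide some of it from users. Below is a minimal, stdlib-only sketch of a retry helper with exponential backoff; the name `call_with_retry` and its parameters are hypothetical, not part of Django or requests. It deliberately retries only the exception types you pass in. For a withdrawal endpoint, retrying only `requests.exceptions.ConnectTimeout` should be the safe choice, since the connection never got through, so the withdrawal request itself was not sent; retrying a read timeout could risk a duplicate withdrawal.

```python
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def call_with_retry(
    fn: Callable[[], T],
    retry_on: Tuple[Type[BaseException], ...],
    attempts: int = 3,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn(), retrying only when it raises one of the retry_on exceptions.

    Sleeps base_delay, then 2 * base_delay, 4 * base_delay, ... between
    attempts, and re-raises the last exception once attempts run out.
    Any other exception propagates immediately without a retry.
    """
    if attempts < 1:
        raise ValueError("attempts must be >= 1")
    for attempt in range(attempts):
        try:
            return fn()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of attempts: let the caller turn this into a 502
            sleep(base_delay * (2 ** attempt))
    raise AssertionError("unreachable")
```

Usage would look something like `call_with_retry(lambda: requests.post(GATEWAY_URL, json=payload, proxies=PROXIES, timeout=(5, 30)), retry_on=(requests.exceptions.ConnectTimeout,))`, where `GATEWAY_URL`, `payload` and `PROXIES` are placeholders for whatever the app already uses. The `(connect, read)` timeout tuple keeps the connect phase short so retries don't stack up into a very slow request.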

The thing is, it only happens very randomly: sometimes once a month, but sometimes a full month goes by with no problem.

I created a custom Grafana dashboard in May because I wanted to monitor this issue.

What usually happens is:

1. I wake up.
2. I check my messages: 50+ users are complaining in our WhatsApp group that they can't withdraw their money from our site.
3. I check the logs.
4. They say "proxy timeout".
5. I try restarting the Squid server.
6. No luck.
7. An hour later, it suddenly works again.

This has already happened about 5-8 times since we migrated to Fly last year.

Today the withdrawal service was down for roughly 8-9 hours, from 3 AM to around 12 PM (noon) Jakarta time.
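Jakarta time (WIB) is a fixed UTC+7 with no daylight saving, so when lining an outage up against logs or dashboards that store timestamps in UTC, the window shifts to the previous evening. A small stdlib sketch of that conversion, assuming the window ended at noon; the date is a hypothetical placeholder, only the 3 AM and 12 PM times come from the outage:

```python
from datetime import datetime, timedelta, timezone
from typing import Tuple

# Jakarta (WIB) is UTC+7 all year; Indonesia does not observe daylight saving time.
WIB = timezone(timedelta(hours=7), "WIB")


def jakarta_window_to_utc(start: datetime, end: datetime) -> Tuple[datetime, datetime, timedelta]:
    """Convert a naive Jakarta wall-clock window to UTC and return (start, end, duration)."""
    start_utc = start.replace(tzinfo=WIB).astimezone(timezone.utc)
    end_utc = end.replace(tzinfo=WIB).astimezone(timezone.utc)
    if end_utc <= start_utc:
        raise ValueError("end must be after start")
    return start_utc, end_utc, end_utc - start_utc


# Hypothetical date; only the 3 AM and 12 PM times are from the real outage.
s, e, d = jakarta_window_to_utc(datetime(2024, 6, 10, 3, 0), datetime(2024, 6, 10, 12, 0))
print(s.isoformat(), e.isoformat(), d)
# 2024-06-09T20:00:00+00:00 2024-06-10T05:00:00+00:00 9:00:00
```

So in UTC-based tooling, the outage would show up as roughly 20:00 UTC the previous day to 05:00 UTC.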

After that it recovered, but with a low error rate (some users still got the error, but most were fine).

But around 15:00 (3 PM) Jakarta time, the errors came back.

And today at 6 PM Jakarta time, while I was building a reproduction, everything was fine again.

My current mitigation is increasing the proxy's memory, which only had 512 MB of RAM, because I saw some memory spikes and I'm not sure what's causing them.

This is my Squid config:

```
# Minimal permissive Squid forward proxy
# WARNING: This is an open proxy configuration. Restrict access before exposing publicly.

# Listen on port 3128
http_port 3128

# Standard ACLs for allowed ports
acl SSL_ports port 443
acl Safe_ports port 80 # http
acl Safe_ports port 21 # ftp
acl Safe_ports port 443 # https
acl Safe_ports port 70 # gopher
acl Safe_ports port 210 # wais
acl Safe_ports port 1025-65535 # unregistered ports
acl Safe_ports port 280 # http-mgmt
acl Safe_ports port 488 # gss-http
acl Safe_ports port 591 # filemaker
acl Safe_ports port 777 # multiling http
acl CONNECT method CONNECT

# Allow everything (OPEN PROXY). Harden before production use.
http_access allow all

# Optional: Disable on-disk caching
cache deny all
cache_mem 0 MB
maximum_object_size 0 KB
cache_dir null /tmp

# DNS and forwarding behavior
dns_v4_first on
forwarded_for on
via off

# Keep Squid quiet-ish
shutdown_lifetime 1 seconds
```
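The app's error says it could not even open a connection to the proxy. One way to catch that before users do, and to check whether it lines up with the memory spikes, is a small synthetic probe run every minute or so from the app side. This is a stdlib-only sketch, not an existing tool: it separately times the TCP connect to Squid and an HTTP `CONNECT` tunnel to the gateway, so "proxy unreachable" and "proxy up but slow to reach the gateway" show up as different numbers. It assumes Squid normally answers `CONNECT` with a 200 only once it has a connection to the target.

```python
import socket
import time
from dataclasses import dataclass
from typing import Optional


@dataclass
class ProbeResult:
    ok: bool                        # True if the proxy answered CONNECT with a 200
    tcp_connect_s: Optional[float]  # seconds to open a TCP connection to the proxy
    tunnel_s: Optional[float]       # seconds from sending CONNECT to the proxy's reply
    detail: str                     # proxy status line, or the error that occurred


def probe_proxy(
    proxy_host: str,
    proxy_port: int,
    target_host: str,
    target_port: int = 443,
    timeout: float = 5.0,
) -> ProbeResult:
    """Time a TCP connect to the proxy, then an HTTP CONNECT through it.

    No TLS handshake is done; the probe only checks whether the proxy
    accepts connections and can open a tunnel to target_host:target_port.
    """
    start = time.monotonic()
    try:
        sock = socket.create_connection((proxy_host, proxy_port), timeout=timeout)
    except OSError as exc:  # refused, unreachable, or timed out (socket timeouts are OSErrors)
        return ProbeResult(False, None, None, f"TCP connect to proxy failed: {exc!r}")
    tcp_connect_s = time.monotonic() - start

    with sock:
        sent = time.monotonic()
        request = (
            f"CONNECT {target_host}:{target_port} HTTP/1.1\r\n"
            f"Host: {target_host}:{target_port}\r\n\r\n"
        )
        reply = b""
        try:
            sock.sendall(request.encode("ascii"))
            # Read until the status line is complete, the proxy closes, or it sends too much.
            while b"\r\n" not in reply and len(reply) < 65536:
                chunk = sock.recv(4096)
                if not chunk:
                    break
                reply += chunk
        except OSError as exc:
            return ProbeResult(False, tcp_connect_s, None, f"CONNECT via proxy failed: {exc!r}")
        tunnel_s = time.monotonic() - sent

    status_line = reply.split(b"\r\n", 1)[0].decode("latin-1")
    parts = status_line.split()
    ok = len(parts) >= 2 and parts[1] == "200"
    return ProbeResult(ok, tcp_connect_s, tunnel_s, status_line or "proxy closed without replying")
```

A call would look like `probe_proxy("my-proxy.internal", 3128, "api.gateway.example")`, where both host names are hypothetical placeholders for the Squid app's private address and the payment gateway's API host. How the results get into Grafana depends on the existing setup and isn't shown here.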

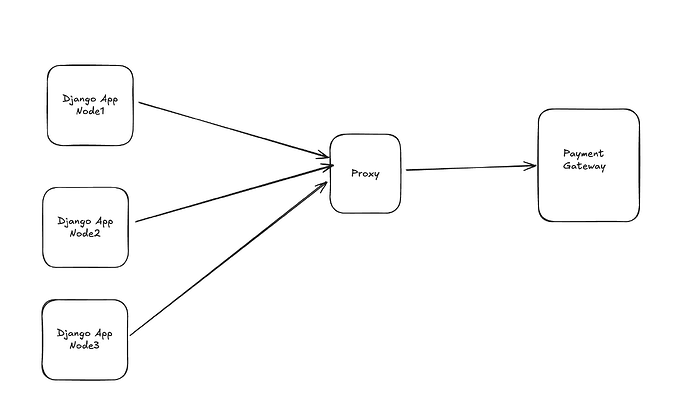

And the architecture looks like this:

I need the proxy because the payment gateway only lets me register a limited number of IPs (4 max, I think).

Since I know most of our users (it's just a small app with thousands of users), I just tell them "okay, wait, let me fix this" (while doing absolutely nothing other than restarting and praying) and ask them to try the feature again after a couple of hours.

My guess is RAM, but spending another $10/mo on RAM for the proxy server is a little bit …